Data flow

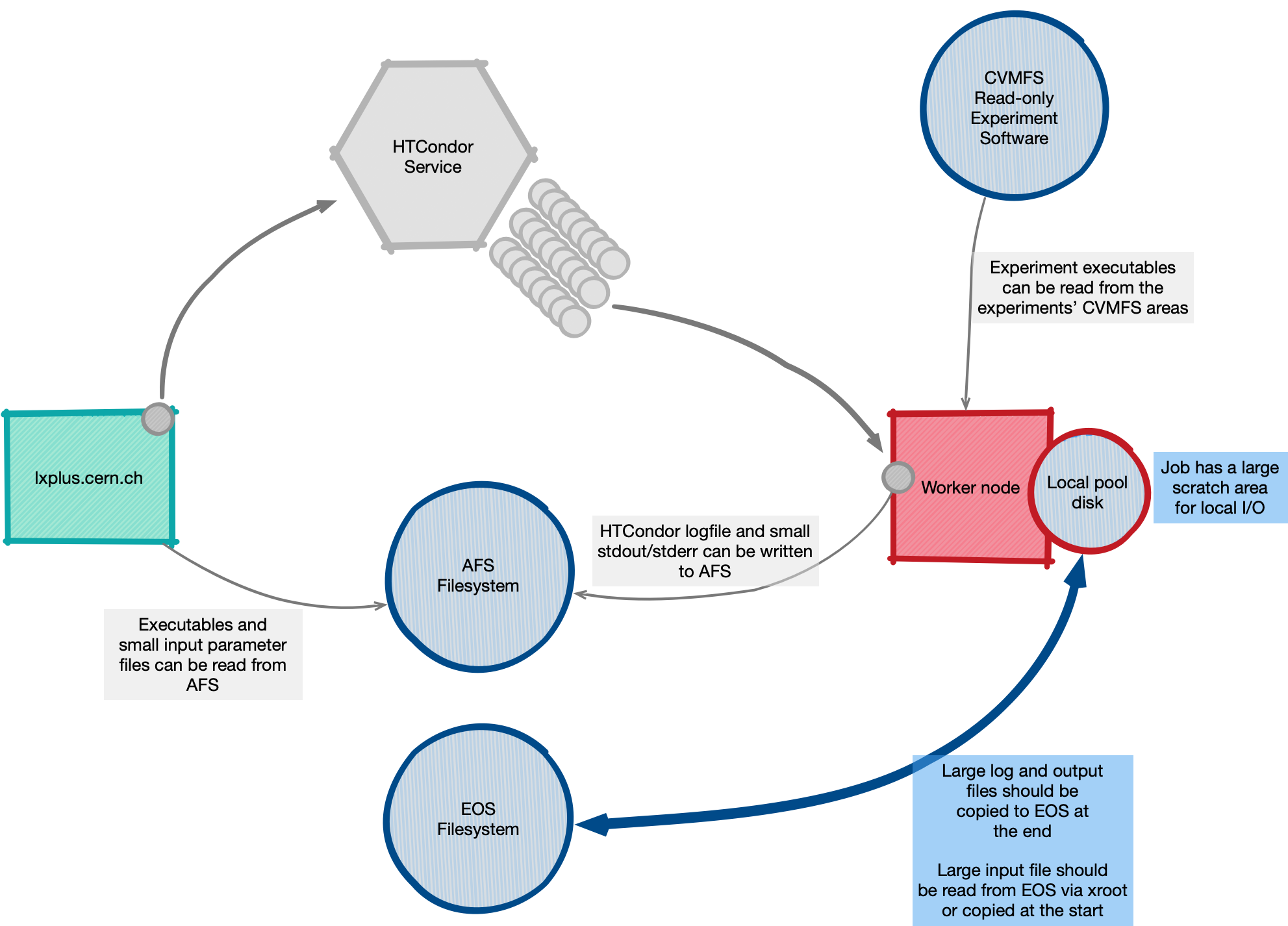

The diagram above shows the data flows of the batch system as it is currently used.

Local jobs are typically submitted from the lxplus.cern.ch Interactive Linux Service. This has access to two shared filesystems, AFS and EOS. The batch system can also see both filesystems. The job is run on the worker node as the user that submitted it, and the running job is given the necessary tokens (Kerberos) to read the user's files from these filesystems.

Both Lxplus and LxBatch can also read the CVMFS read-only filesystem, which contains experiment software trees (typically curated by the experiment software experts).

Finally, the local pool directory is the working directory that the job starts in; it is deleted once the job has finished. It is located on the local disk of the worker node where the job is executed, so I/O performance from it should be high.

Input files

The executable for a job can be taken from 3 places:

- CVMFS (read-only) file-systems. This is preferred for larger experiments.

- A path in the AFS filesystem

- A path in the EOS filesystem

(Small) job parameter files are often read from either AFS or EOS. See the later examples for how this is typically done.

Large input files should be read directly from EOS via the xroot protocol, or copied over from EOS to the local pool area of the job. The latter can be accomplished manually from the job executable or, even better, using the HTCondor xrootd file transfer plugin. Moreover, the new (experimental) EosSubmit schedds will automatically use the indicated xrootd plugin for files located at EOS.
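
The manual copy mentioned above might look like the following job wrapper. This is a minimal sketch, not a recommended recipe: the EOS endpoint `root://eosuser.cern.ch`, the example path, and the `EOS_URL` and `COPY_CMD` variables are assumptions made for illustration; `xrdcp` is the standard XRootD copy client.

```shell
#!/bin/bash
# Sketch of a job wrapper that stages a large input file from EOS into the
# job's local pool directory (the directory the job starts in).
# Hypothetical names: EOS_URL, COPY_CMD and the example path below.

EOS_URL="${EOS_URL:-root://eosuser.cern.ch}"   # assumed EOS endpoint

stage_in() {
    # $1: path of the file on EOS, $2: destination directory (default: cwd)
    local src="$1" dest="${2:-$PWD}"
    # COPY_CMD defaults to xrdcp; it can be overridden so that the logic can
    # be exercised on a machine without an XRootD client.
    ${COPY_CMD:-xrdcp} "${EOS_URL}/${src}" "${dest}/$(basename "$src")"
}

# Example call inside a job (the path is illustrative):
# stage_in /eos/user/j/jdoe/data/input.root && ./analysis input.root
```

Copying once and then reading from the pool directory keeps repeated reads on the worker node's local disk.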

Output files

Large output files should be written to the local pool directory and copied to EOS at the end of the job. As with the input files, this copy can be performed manually from within the job executable (see Big files for an example), by explicitly configuring the HTCondor xrootd file transfer plugin in the submit file, or by using the new (experimental) EosSubmit schedds.

The pool space available is set by WLCG at 20 GB per processing core, and CERN purchases machines with this ratio. If you need more than 20 GB per core, please ensure you request more cores to avoid your job being killed for using too much disk.
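
As a worked example of the arithmetic, the number of cores needed for a given amount of pool space is the disk requirement divided by 20 GB, rounded up. The helper name `cores_for_disk` is hypothetical and the sketch assumes whole gigabytes.

```shell
#!/bin/bash
# Number of cores to request so that the pool-space allowance
# (20 GB per core, as stated above) covers the job's disk needs.

GB_PER_CORE=20

cores_for_disk() {
    # $1: disk needed by the job, in whole GB
    local need_gb="$1"
    # Integer ceiling division: (a + b - 1) / b
    echo $(( (need_gb + GB_PER_CORE - 1) / GB_PER_CORE ))
}

cores_for_disk 45    # prints 3 (2 cores would give only 40 GB)
```

The result would then be the value to request for cores in the submit file, for instance through HTCondor's `request_cpus` command.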

Writing directly to AFS or EOS (POSIX-like) from the executables is not recommended: performance will probably be lower and the risk of failure higher.
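
The pattern of writing locally and copying once at the end could be sketched as follows. The endpoint `root://eosuser.cern.ch`, the destination directory, and the names `EOS_URL`, `EOS_DIR`, `COPY_CMD`, `stage_out` and `run_and_stage_out` are all assumptions chosen for illustration.

```shell
#!/bin/bash
# Sketch: the payload writes its output into the pool directory, and the
# file is copied to EOS in one go once the payload has finished.

EOS_URL="${EOS_URL:-root://eosuser.cern.ch}"      # assumed EOS endpoint
EOS_DIR="${EOS_DIR:-/eos/user/j/jdoe/results}"    # hypothetical destination

stage_out() {
    # $1: local output file in the pool directory
    local file="$1"
    # COPY_CMD defaults to xrdcp and is overridable for local testing.
    ${COPY_CMD:-xrdcp} "$file" "${EOS_URL}/${EOS_DIR}/$(basename "$file")"
}

run_and_stage_out() {
    # $1: output file name; remaining arguments: the payload command
    local out="$1"; shift
    "$@" || return 1     # payload failed: there is nothing to copy
    stage_out "$out"     # the copy status becomes the return status
}

# Example (illustrative payload):
# run_and_stage_out output.root ./analysis --out output.root
```

Returning the copy status means a failed transfer shows up as a failed job instead of passing silently.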

Temporary space

HTCondor bind-mounts /tmp and /var/tmp into subdirectories of your pool space, so these are not separate filesystems and are subject to the same conditions as other files in your pool directory (20 GB per core overall, and cleaned at the end of the job).

The TMPDIR environment variable is defined, pointing to the location of /tmp.
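
A job script can use this variable for scratch files. The following is a minimal sketch; the directory and file names are hypothetical, and the fallback to /tmp is only there so the snippet also runs outside a job.

```shell
#!/bin/bash
# Create a private scratch directory under TMPDIR (falling back to /tmp when
# the variable is unset, e.g. when trying this outside a batch job).
scratch=$(mktemp -d "${TMPDIR:-/tmp}/scratch.XXXXXX") || exit 1
trap 'rm -rf "$scratch"' EXIT    # tidy up on exit; the pool is wiped anyway

echo "intermediate data" > "$scratch/part1.tmp"

# Inside a job, space used here counts against the same pool allowance
# as files in the working directory.
du -sh "$scratch"
```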

Spool submission

CERN HTCondor jobs normally make use of AFS as a shared filesystem between the user's submit machine, the schedd and the final execution node. However, intensive use of AFS may cause instability and sometimes other problems (exhaustion of quota, reaching the maximum number of files in a directory, etc.).

To avoid this, users can use the spool mode, which is selected by passing the -spool option to the condor_submit command. In this mode, input files are asynchronously copied by HTCondor from the submit node to the schedd service, and from there to the execution node. No shared filesystem is used. The user log file is also written to the schedd's local filesystem rather than to the shared filesystem.

Output files are copied to the schedd once the job has completed. The user can then retrieve them (copy them to the submit machine), together with the log file, by explicitly running condor_transfer_data $JOBID (where $JOBID represents the condor job ID, e.g. JOBID=123456789.0).
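
The two commands above can be wrapped as follows. This is only a sketch: the function names and the submit file name `job.sub` are hypothetical, while `condor_submit -spool` and `condor_transfer_data` are the commands named in the text.

```shell
#!/bin/bash
# Sketch of the spool workflow: submit without a shared filesystem, then
# fetch the results once the job has completed.

submit_spooled() {
    # $1: submit description file
    condor_submit -spool "$1"
}

fetch_results() {
    # $1: job ID, e.g. 123456789.0
    # Copies the output files and the user log from the schedd to this machine.
    condor_transfer_data "$1"
}

# Typical sequence (the job ID is reported by condor_submit):
# submit_spooled job.sub
# fetch_results 123456789.0
```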

Note that the spooled data is kept on the schedd only for a limited period of time, which ends at the earliest of the following:

- When you run condor_transfer_data.

- When you run condor_rm.

- After 10 days (or less if the schedd spool directory fills up).

Note

Input and output files copied to or from the schedd are limited to 1 GB. For larger files, please see the second recommendation below (direct transfer between EOS and the execution nodes).

Note also that certain schedds accept only spool jobs and should thus be especially stable (but apply more restrictive constraints). They are described here.

While spool submissions may help overcome AFS issues, they also put pressure on the schedds. In particular, if jobs transfer large amounts of files, they may fill the schedd's disk space. To help alleviate this problem, please always set the transfer_input_files and transfer_output_files attributes in your submit files for spool jobs (it is good practice for non-spool jobs too). This prevents transferring back potentially numerous undesired files produced by the jobs.

N.B. Schedds will reject spool jobs that do not set at least the transfer_output_files attribute.
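
A submit file that lists its transfers explicitly might look like the one written by the snippet below. It is a minimal sketch: every file name (`run.sh`, `config.json`, `result.root`, `summary.txt`, `spool_job.sub`) is hypothetical, and the remaining settings are ordinary HTCondor submit commands chosen for illustration.

```shell
#!/bin/bash
# Write a minimal submit file for a spool job that names its input and
# output files explicitly. All file names are hypothetical.
cat > spool_job.sub <<'EOF'
executable              = run.sh
arguments               = config.json
output                  = job.$(ClusterId).$(ProcId).out
error                   = job.$(ClusterId).$(ProcId).err
log                     = job.$(ClusterId).log

should_transfer_files   = YES
when_to_transfer_output = ON_EXIT
# Only these inputs are sent to the execution node...
transfer_input_files    = config.json
# ...and only these outputs are copied back to the schedd.
transfer_output_files   = result.root, summary.txt

queue
EOF

# Submit with: condor_submit -spool spool_job.sub
```

Listing the outputs by name means that stray files left in the job's working directory are not copied back.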

Alternatively, as indicated above, consider transferring input/output files directly between the storage service (EOS) and the execution nodes using the HTCondor xrootd file transfer plugin. This is preferred for large workloads since it avoids the schedd altogether. It can be achieved by explicit settings in the submit file, or automatically when transferring to/from EOS and using the new (experimental) EosSubmit schedds.
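
An explicit configuration could resemble the submit file written below. Treat it as a sketch under assumptions: the `root://eosuser.cern.ch` endpoint, the EOS paths and the file names are hypothetical, and the plugin is assumed to be selected by the `root://` URL scheme; the exact settings for a given schedd should be checked against the plugin documentation.

```shell
#!/bin/bash
# Write a submit file that moves data directly between EOS and the
# execution node. Endpoint, paths and file names are hypothetical.
cat > eos_xfer.sub <<'EOF'
executable              = run.sh
output                  = job.$(ClusterId).$(ProcId).out
error                   = job.$(ClusterId).$(ProcId).err
log                     = job.$(ClusterId).log

should_transfer_files   = YES
# Input is fetched from EOS straight to the execution node
# (assumes the root:// scheme is handled by the xrootd plugin).
transfer_input_files    = root://eosuser.cern.ch//eos/user/j/jdoe/input/data.root
# Only this output is transferred, and it is sent to the EOS directory below.
transfer_output_files   = result.root
output_destination      = root://eosuser.cern.ch//eos/user/j/jdoe/output/$(ClusterId)/

queue
EOF

# Submit with: condor_submit eos_xfer.sub
```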