Kerberos in HTCondor

Kerberos plays an important role while launching jobs in our HTCondor cluster. It is used at different stages of the job life-cycle for two main purposes:

- To authenticate users against the cluster when they perform operations.

- When the job is running, to allow access to external services from within the job. External services such as AFS or EOS are accessed with Kerberos.

Authentication against the cluster

Authenticating against the cluster to perform operations is straightforward and works like any other standard Kerberos-protected service. Users must ensure that valid Kerberos credentials are present before invoking the commands. The validity of the credentials can be checked with klist.

fernandl@aiadm51:~$ klist

Ticket cache: FILE:/tmp/krb5cc_107352_TE4huqx3Gs

Default principal: fernandl@CERN.CH

Valid starting Expires Service principal

08/01/18 11:46:46 09/01/18 12:46:35 krbtgt/CERN.CH@CERN.CH

renew until 12/01/18 19:11:29

08/01/18 11:46:46 09/01/18 12:46:35 afs/cern.ch@CERN.CH

renew until 12/01/18 19:11:29

fernandl@aiadm51:~$ condor_q

-- Schedd: bigbird12.cern.ch : <137.138.120.116:9618?... @ 01/08/18 11:59:47

OWNER BATCH_NAME SUBMITTED DONE RUN IDLE HOLD TOTAL JOB_IDS

0 jobs; 0 completed, 0 removed, 0 idle, 0 running, 0 held, 0 suspended

fernandl@aiadm51:~$

If the credentials are not valid, authentication fails and so do the operations. This can be solved by running kinit to obtain fresh credentials:

fernandl@aiadm51:~$ condor_q

Error:

Extra Info: You probably saw this error because the condor_schedd is not

running on the machine you are trying to query. If the condor_schedd is not

running, the Condor system will not be able to find an address and port to

connect to and satisfy this request. Please make sure the Condor daemons are

running and try again.

Extra Info: If the condor_schedd is running on the machine you are trying to

query and you still see the error, the most likely cause is that you have

setup a personal Condor, you have not defined SCHEDD_NAME in your

condor_config file, and something is wrong with your SCHEDD_ADDRESS_FILE

setting. You must define either or both of those settings in your config

file, or you must use the -name option to condor_q. Please see the Condor

manual for details on SCHEDD_NAME and SCHEDD_ADDRESS_FILE.

fernandl@aiadm51:~$ kinit

Password for fernandl@CERN.CH:

fernandl@aiadm51:~$ condor_q

-- Schedd: bigbird12.cern.ch : <137.138.120.116:9618?... @ 01/08/18 12:07:04

OWNER BATCH_NAME SUBMITTED DONE RUN IDLE HOLD TOTAL JOB_IDS

0 jobs; 0 completed, 0 removed, 0 idle, 0 running, 0 held, 0 suspended
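For scripted use, the check-then-kinit sequence shown above can be wrapped in a small shell function. The following is a minimal sketch and not part of HTCondor: with_ticket is a hypothetical helper name, and the sketch assumes MIT Kerberos, where klist -s prints nothing and reports through its exit status whether the cache holds valid credentials.

```shell
# Run a command, obtaining a Kerberos ticket first only if none is valid.
# with_ticket is a hypothetical helper name, not an HTCondor or Kerberos tool.
with_ticket() {
    # klist -s is silent; it exits with 0 only when the credential cache
    # can be read and has not expired.
    if ! klist -s; then
        echo "No valid Kerberos ticket, running kinit..." >&2
        kinit || return 1   # prompts for the password; give up if it fails
    fi
    # Run the requested command with its arguments unchanged.
    "$@"
}
```

It would be invoked as with_ticket condor_q. Because kinit prompts for a password, this only suits interactive sessions on the submit machines.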

Authentication within the job

Authentication from within the jobs is more complex as the cluster must ensure the credentials are valid while the job is running.

The CERN HTCondor instance is configured to keep Kerberos tickets up to date, as well as AFS tokens (via aklog) and EOS FUSE authentication (using the eosfusebind command).

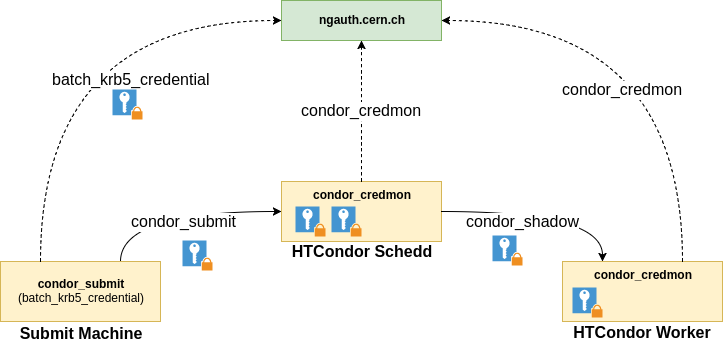

The following picture shows the main interactions between all the components to ensure these credentials are always valid in the jobs:

- The submit machines (lxplus, aiadm, ...) are configured to automatically call batch_krb5_credential. This command generates a block of information containing the user's credentials and a special token provided by ngauth.cern.ch. This token allows HTCondor to generate the Kerberos ticket on behalf of the user.

- The HTCondor schedds use the credentials received to initialize the job log in AFS. Additionally, the credentials are stored for as long as the user has jobs running on the system, because the workers will need them when the job runs. To ensure the credentials stay valid during the job life-cycle, a service called condor_credmon renews them periodically and, if that is not possible, talks to ngauth.cern.ch with the token provided at submission time to issue a new Kerberos ticket.

- Finally, when the job runs on the worker, the schedd sends the credentials to the worker, which stores them in a similar fashion. Again, condor_credmon is responsible for keeping them up to date as well as requesting AFS and EOS tokens.
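The renew-first, reissue-as-fallback behaviour described above can be illustrated with a short shell sketch. This is not how condor_credmon is implemented; it only mirrors the order of the two steps. The function names are hypothetical, and request_ticket_with_token is a placeholder for the exchange with ngauth.cern.ch, whose real interface is not covered here. The only real command used is kinit -R, which renews an existing renewable ticket in MIT Kerberos.

```shell
# Illustration only: this is NOT condor_credmon's implementation.
# request_ticket_with_token is a hypothetical placeholder for the exchange
# with ngauth.cern.ch; here it just reports that it was reached and fails.
request_ticket_with_token() {
    echo "placeholder: would ask ngauth.cern.ch for a new ticket" >&2
    return 1
}

# Keep the ticket in the given credential cache usable:
# try to renew it first, and fall back to the token only if renewal fails.
refresh_credentials() {
    cache=$1
    # kinit -R renews the existing ticket; it fails once the ticket has
    # expired or its "renew until" limit has passed.
    if kinit -R -c "$cache" 2>/dev/null; then
        echo renewed
    elif request_ticket_with_token "$cache"; then
        echo reissued
    else
        echo failed
        return 1
    fi
}
```

The "renew until" limit is the one shown in the klist output earlier on this page, which is why a fallback path is needed for jobs that outlive it.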

To check whether these mechanisms are working as expected, a user can run commands such as tokens or klist -f from within a job to verify that the AFS and Kerberos environment is properly set up.
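As a sketch of such a check, the function below prints the output of both commands so that it ends up in the job's standard output. The name report_credentials is hypothetical, and the sketch assumes that klist and the AFS tokens command are available on the worker.

```shell
# Print the credential state as seen from inside a job.
# report_credentials is a hypothetical helper name.
report_credentials() {
    echo "== Kerberos tickets (klist -f) =="
    # -f also shows the ticket flags (for example R for renewable).
    klist -f || echo "klist could not read a credential cache"
    echo "== AFS tokens (tokens) =="
    tokens || echo "tokens command failed"
}
```

Calling report_credentials at the top of the job's executable, before the real payload, makes it easy to compare what the job sees with what klist shows on the submit machine.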